Little did I know that so many databases like Cassandra actually exist, or how they keep evolving while serving different applications. Until a few months ago, the closest I’d ever come to hands-on database work was SQL. It was the one popular name that kept coming up through school, college, and at work in the disguised form of DB2.


Now that I’m exploring my way into big data, the first thought that hit me was “How different is this from SQL?” Of course, the SQL vs. NoSQL debate will probably stay alive forever, so here are the main observations that occurred to me.

I used MongoDB in one of my projects just because it was popular, without even knowing what a NoSQL database really means. So I’ve tried to compare it as well.

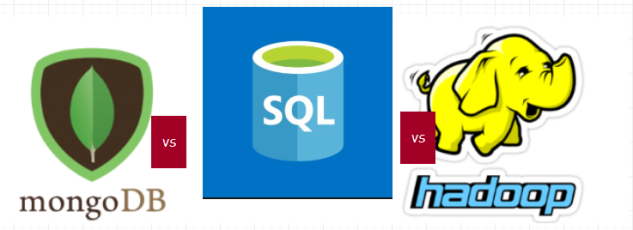

The first difference shows up when transferring data between SQL databases, whether for backup, redundancy, or other reasons.

The data has to match the target’s schema exactly in order to be copied. By that I mean the target table needs the same structure: the same columns, data types, and so on. Otherwise, it’s simply going to reject the data.
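To make this concrete, here is a minimal sketch using Python’s built-in `sqlite3` module, with SQLite standing in for DB2 or any other SQL database. The table names and the `copy_rows` helper are hypothetical. SQLite is much looser about data types than DB2, so this only demonstrates a column mismatch being rejected.

```python
import sqlite3

# In-memory SQLite database standing in for the source and target of a copy.
# Table names are hypothetical, chosen for illustration.
conn = sqlite3.connect(":memory:")
with conn:  # commit the setup so a later rollback can't undo it
    conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, email TEXT)")
    conn.execute("CREATE TABLE customers_backup (id INTEGER PRIMARY KEY, name TEXT)")  # no email column
    conn.execute("CREATE TABLE customers_mirror (id INTEGER PRIMARY KEY, name TEXT, email TEXT)")
    conn.execute("INSERT INTO customers VALUES (1, 'Ada', 'ada@example.com')")


def copy_rows(conn, src, dst):
    """Copy every row of src into dst. Returns True on success, False if the database rejects it."""
    try:
        with conn:  # commits on success, rolls back on error
            # Table names are trusted constants here; never interpolate user input into SQL.
            conn.execute(f"INSERT INTO {dst} SELECT * FROM {src}")
        return True
    except sqlite3.Error as err:
        print(f"{src} -> {dst} rejected: {err}")
        return False


backup_ok = copy_rows(conn, "customers", "customers_backup")  # structure differs: rejected
mirror_ok = copy_rows(conn, "customers", "customers_mirror")  # same structure: copied
```

The copy into `customers_backup` fails because that table has two columns while `customers` supplies three; the copy into `customers_mirror`, which has the same structure, goes through.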

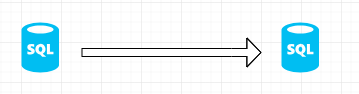

Hadoop, known for its ability to handle unstructured data, does the job without fussing over what the data looks like. It is the responsibility of the programs processing the data to understand and respect its structure.

Since the data is unstructured (e.g., Twitter data), there is no such thing as a table structure in Hadoop. In fact, the moment the data enters HDFS (the Hadoop Distributed File System), it is split into blocks and each block is replicated across multiple data nodes. This may seem illogical, since keeping multiple copies of the same data looks inefficient, but it is the secret behind Hadoop’s selling point.

Spreading those replicated blocks across many nodes lets Hadoop scale to practically unlimited amounts of big data.
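The idea of splitting data into blocks and replicating them can be sketched in a few lines of Python. This is a toy model, not how HDFS actually places blocks: the `place_blocks` helper and node names are hypothetical, the block size is shrunk to a few bytes (HDFS uses 128 MB blocks by default), and replicas are assigned round-robin, whereas real HDFS placement is rack-aware.

```python
def place_blocks(data: bytes, nodes: list, block_size: int = 8, replication: int = 3):
    """Split data into fixed-size blocks and assign each block to `replication` distinct nodes.

    Toy round-robin placement. block_size is tiny for illustration (HDFS defaults to 128 MB);
    replication=3 mirrors HDFS's default replication factor.
    """
    if replication > len(nodes):
        raise ValueError("need at least as many nodes as replicas")
    blocks = [data[i:i + block_size] for i in range(0, len(data), block_size)]
    placement = {
        idx: [nodes[(idx + r) % len(nodes)] for r in range(replication)]
        for idx in range(len(blocks))
    }
    return blocks, placement
```

For example, `place_blocks(b"#WorldCup what a goal!", ["node1", "node2", "node3", "node4"])` yields three blocks, each stored on three different nodes, so losing any single node still leaves two copies of every block.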

Consider an example where you have to analyze the Twitter data generated over an entire period, or around an event such as the FIFA World Cup, the Olympics, or the US elections. We can find the keywords or the emotions and opinions expressed in the majority or minority of people’s tweets.

So even though this massive data set is replicated over several nodes, each instance of the Hadoop program (written in Java, Python, or any other language) processes only its own part of it. The partial results from these programs are then combined, in the “reduce” step of MapReduce, into the final result.
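Here is a minimal, single-machine sketch of that map-then-reduce flow in Python, counting keywords in tweets. The partitions stand in for the slices of data stored on different nodes; the function names are hypothetical. One simplification: in real Hadoop MapReduce, mappers typically emit `(word, 1)` pairs that the framework shuffles by key before reducing, while here each mapper pre-aggregates its own counts, similar to what a combiner does.

```python
from collections import Counter
from functools import reduce


def map_phase(tweets):
    """Mapper: count keywords in only this node's slice of the tweets."""
    counts = Counter()
    for tweet in tweets:
        words = (w.lower().strip("#!?.,") for w in tweet.split())
        counts.update(w for w in words if w)  # skip tokens that were pure punctuation
    return counts


def reduce_phase(partial_counts):
    """Reducer: merge the partial counts from every mapper into one total."""
    return reduce(lambda total, part: total + part, partial_counts, Counter())
```

With one list of tweets per node, `reduce_phase(map_phase(p) for p in partitions)` gives the overall keyword counts.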

So even if there is a problem, such as nodes crashing, Hadoop can still complete the job, because the work from a failed node is re-run on another node that holds a replica of the same data.
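A toy scheduler shows why replication makes this possible: each block’s task runs on any live node that holds a copy of it. This is a simplified, hypothetical sketch (`run_job` and the node names are made up); real Hadoop detects failures through heartbeats and reschedules failed tasks, but the principle is the same.

```python
def run_job(placement, alive, task):
    """Run `task` once per block on the first live node holding a replica of it.

    placement: block id -> list of nodes storing that block
    alive: set of nodes that are currently up
    task: function (block_id, node) -> result
    """
    results = {}
    for block_id, replicas in placement.items():
        node = next((n for n in replicas if n in alive), None)
        if node is None:  # every copy of this block is on a crashed node
            raise RuntimeError(f"all replicas of block {block_id} are unavailable")
        results[block_id] = task(block_id, node)
    return results
```

With `placement = {0: ["node1", "node2", "node3"], 1: ["node2", "node3", "node4"]}` and `node1` down, block 0 is simply processed on `node2` instead; the job fails only if all three copies of a block are gone.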

SQL databases, on the other hand, follow a two-phase commit when a transaction spans several nodes (big fan of the rollback option, though).

Two-phase commit: a distributed algorithm that coordinates all the processes participating in a distributed atomic transaction on whether to commit or abort the transaction.
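The two phases can be sketched as a toy coordinator in Python. This is an in-memory illustration under simplifying assumptions (no network, no crashes, no durable logs, all of which real implementations must handle); the `Participant` class and its `can_commit` flag are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Participant:
    name: str
    can_commit: bool = True  # hypothetical: whether this node's local work succeeded
    state: str = "init"

    def prepare(self) -> bool:
        """Phase 1: vote yes only if the local part of the transaction can be committed."""
        self.state = "prepared" if self.can_commit else "aborted"
        return self.can_commit

    def commit(self):
        self.state = "committed"

    def abort(self):
        self.state = "aborted"


def two_phase_commit(participants):
    """Coordinator: commit everywhere only if every participant votes yes; otherwise abort everywhere."""
    votes = [p.prepare() for p in participants]  # Phase 1: collect votes
    if all(votes):
        for p in participants:  # Phase 2: everyone commits
            p.commit()
        return "committed"
    for p in participants:  # Phase 2: everyone rolls back
        p.abort()
    return "aborted"
```

A single “no” vote in phase 1 is enough to make the coordinator abort on every node, which is what keeps the transaction atomic.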

A transaction’s results are released only once the whole transaction is complete. Of course, each approach makes more sense for the applications it’s deployed in. For example, SQL is more appropriate for banking transactions, where ROLLBACK comes in handy.
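A bank transfer shows why ROLLBACK is handy. In this minimal sketch (SQLite via Python’s `sqlite3` module; the `accounts` table and the `transfer` and `balances` helpers are hypothetical), the credit is applied first, so when the debit violates the no-negative-balance rule, the rollback has to undo a change that already happened.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
with conn:
    # CHECK constraint: balances may never go negative
    conn.execute("CREATE TABLE accounts (name TEXT PRIMARY KEY, balance INTEGER NOT NULL CHECK (balance >= 0))")
    conn.executemany("INSERT INTO accounts VALUES (?, ?)", [("alice", 100), ("bob", 50)])


def transfer(conn, src, dst, amount):
    """Move money atomically: both UPDATEs commit together, or a failure rolls both back."""
    try:
        with conn:  # commits on success, rolls back on exception
            conn.execute("UPDATE accounts SET balance = balance + ? WHERE name = ?", (amount, dst))
            conn.execute("UPDATE accounts SET balance = balance - ? WHERE name = ?", (amount, src))
        return True
    except sqlite3.IntegrityError:  # raised when the CHECK constraint fails
        return False


def balances(conn):
    return dict(conn.execute("SELECT name, balance FROM accounts"))
```

Calling `transfer(conn, "alice", "bob", 500)` returns `False` and leaves both balances untouched, including Bob’s already-applied credit, while `transfer(conn, "alice", "bob", 30)` commits both updates together.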

YESS!! IN HADOOP, THE COMPLEXITY AND RELIABILITY DEPEND ON THE NATURE OF THE PROGRAM!!!

Fun fact: Hive is a data analysis tool built on top of Apache Hadoop, developed initially at Facebook to help employees who knew SQL but not much other coding. Its queries look much like SQL, while MapReduce jobs run in the backend. This way, they get the best of both worlds: easy, fun SQL and Hadoop’s powerful capabilities.
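To get a feel for how a SQL-style query can run as MapReduce, here is a rough Python sketch of what a query like `SELECT hashtag, COUNT(*) FROM tweets GROUP BY hashtag;` boils down to. It only illustrates the idea; Hive’s actual query compiler and execution plans are considerably more involved, and the `group_by_count` helper is hypothetical.

```python
from collections import defaultdict


def group_by_count(rows, key_index):
    """Roughly what GROUP BY ... COUNT(*) becomes as map, shuffle and reduce steps."""
    # Map: emit a (key, 1) pair for every row
    mapped = [(row[key_index], 1) for row in rows]
    # Shuffle: bring all values for the same key together
    groups = defaultdict(list)
    for key, one in mapped:
        groups[key].append(one)
    # Reduce: sum the values in each group
    return {key: sum(ones) for key, ones in groups.items()}
```

For rows of `(user, hashtag)` tuples, `group_by_count(rows, 1)` returns how many rows carry each hashtag, the same answer the HiveQL query would give.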

HOW IS MONGODB ANY DIFFERENT?

Relational databases are widely considered a poor fit for big data. MongoDB, in turn, relaxes some consistency guarantees and can store both structured and unstructured data.
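The flexible-schema side is easy to picture with plain Python dicts standing in for MongoDB documents. This sketch does not talk to a real MongoDB server; the `find` helper is a hypothetical stand-in loosely mimicking an equality query such as PyMongo’s `collection.find({"user": "bob"})`.

```python
# Documents in one collection don't have to share the same fields.
tweets = [
    {"_id": 1, "user": "ada", "text": "What a goal!", "hashtags": ["#WorldCup"]},
    {"_id": 2, "user": "bob", "text": "Polls are open today"},  # no hashtags field
    {"_id": 3, "user": "cy", "retweet_of": 1},                  # no text field either
]


def find(collection, **criteria):
    """Return documents whose fields equal all criteria; documents missing a field just don't match."""
    return [
        doc for doc in collection
        if all(key in doc and doc[key] == value for key, value in criteria.items())
    ]
```

In a relational table, the third record would need `NULL`s for the missing columns or a schema change; here it is stored as-is.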

Although MongoDB offers some of the same benefits as Hadoop, such as fast access and scalability, its implementation differs from Hadoop’s traditional MapReduce.

Btw, MONGODB CAN DO REAL-TIME DATA PROCESSING, WHICH HADOOP WAS NOT ORIGINALLY DESIGNED FOR.
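The difference between real-time and batch processing can be sketched with a hashtag counter. This is a hypothetical illustration of the two styles, not MongoDB’s or Hadoop’s actual machinery: the live counter updates its totals as each document arrives, while the batch function recomputes everything from the full dataset after the fact.

```python
from collections import Counter


class LiveHashtagCounter:
    """Real-time style: keep running totals, updated on every new document."""

    def __init__(self):
        self.counts = Counter()

    def on_insert(self, doc):
        self.counts.update(doc.get("hashtags", []))  # documents without hashtags add nothing


def batch_count(docs):
    """Batch style: recompute the totals from the whole dataset in one pass."""
    counts = Counter()
    for doc in docs:
        counts.update(doc.get("hashtags", []))
    return counts
```

Both end up with the same totals; the live counter simply has an up-to-date answer after every insert instead of only after the batch job finishes.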

Judgment: Right now, Hadoop is meant for the post-transaction, analytical side of massive data, whereas MongoDB suits real-time transactions on big data. The real winner will be whichever can do both.

Wait, I bet something is already in the works for that.